In an era when AI is rapidly changing the world, LlamaCon 2025 has become a key platform that brings together experts and the developer community to share the latest AI visions and innovations, especially around the Llama models, the open source AI models that have become highly popular over the past two years. This article explores the concepts and technologies unveiled at the event and analyzes the importance and direction of AI in the near future.

The beginnings of Open Source AI and past challenges

Two years ago, the concept of open AI was seen as far-fetched and risky. Many people questioned the financial viability and safety of releasing resource-intensive AI models, and in particular worried that opening up a model could lead to the technology being misused or causing harm.

Meta's Chris Cox said of these challenges: "People say we're crazy to invest in training models and give them away for free." But Meta itself was once a startup that relied on open source as the foundation for its technology, so belief in the power of community and shared development is a key driver.

Interestingly, open source AI is now widely accepted by governments and the technology industry because of its transparency, verifiability, and ability to be customized effectively for specific applications.

Llama's Success and Community Support

Llama 4 is a major turning point that shows how open source AI models can compete with closed models, and Llama models overall have reached an astonishing 1.2 billion downloads, up from the 1 billion announced about ten weeks before the event.

In addition to the main models, there are derivative models, which developers create to meet specific needs such as localization or use in specific applications.

This success is made possible by a wide range of partners, which lets users get started with Llama easily and flexibly through a modular stack in which developers choose only the parts that suit their work.

Top features of Llama 4

- MOE (Mixture of Experts) Model: A sparse architecture that activates only a portion of the model for each input. This delivers high performance with a smaller active model size.

- Multimodal: Supports both text and image input in the same model, making it faster and more accurate for tasks that require joint image and text analysis.

- Multilingual: It supports up to 200 languages and was trained on many times more multilingual tokens than the previous generation.

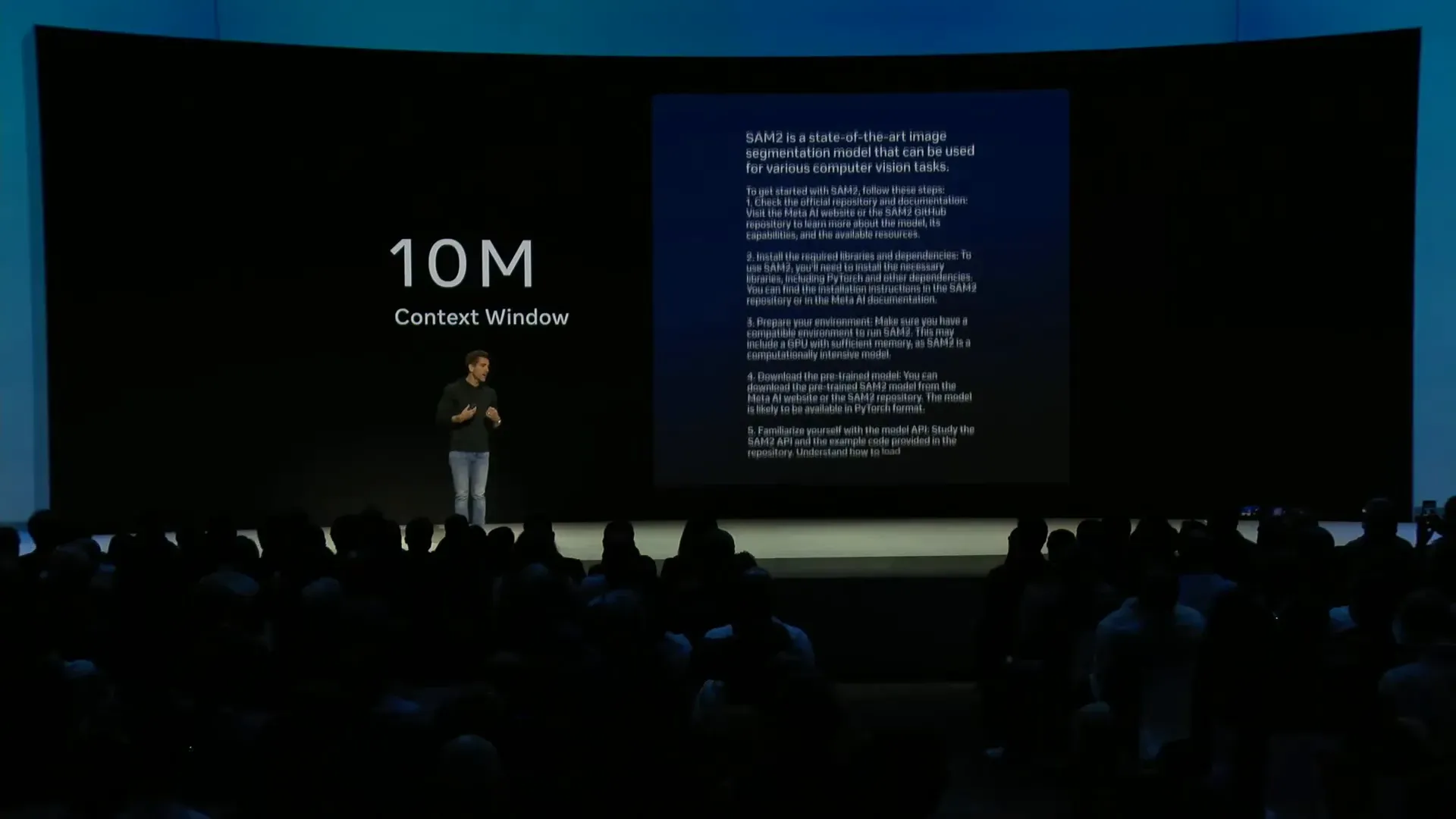

- Large Context Window: It can fit a large amount of information in context, such as an entire codebase or even the US tax code.

- High Efficiency and Small Size: The smallest model, Scout, can run on a single H100 GPU, and the Maverick model, with 17 billion active parameters, runs on a single host with 8 GPUs, making it suitable for practical use in Meta AI products.

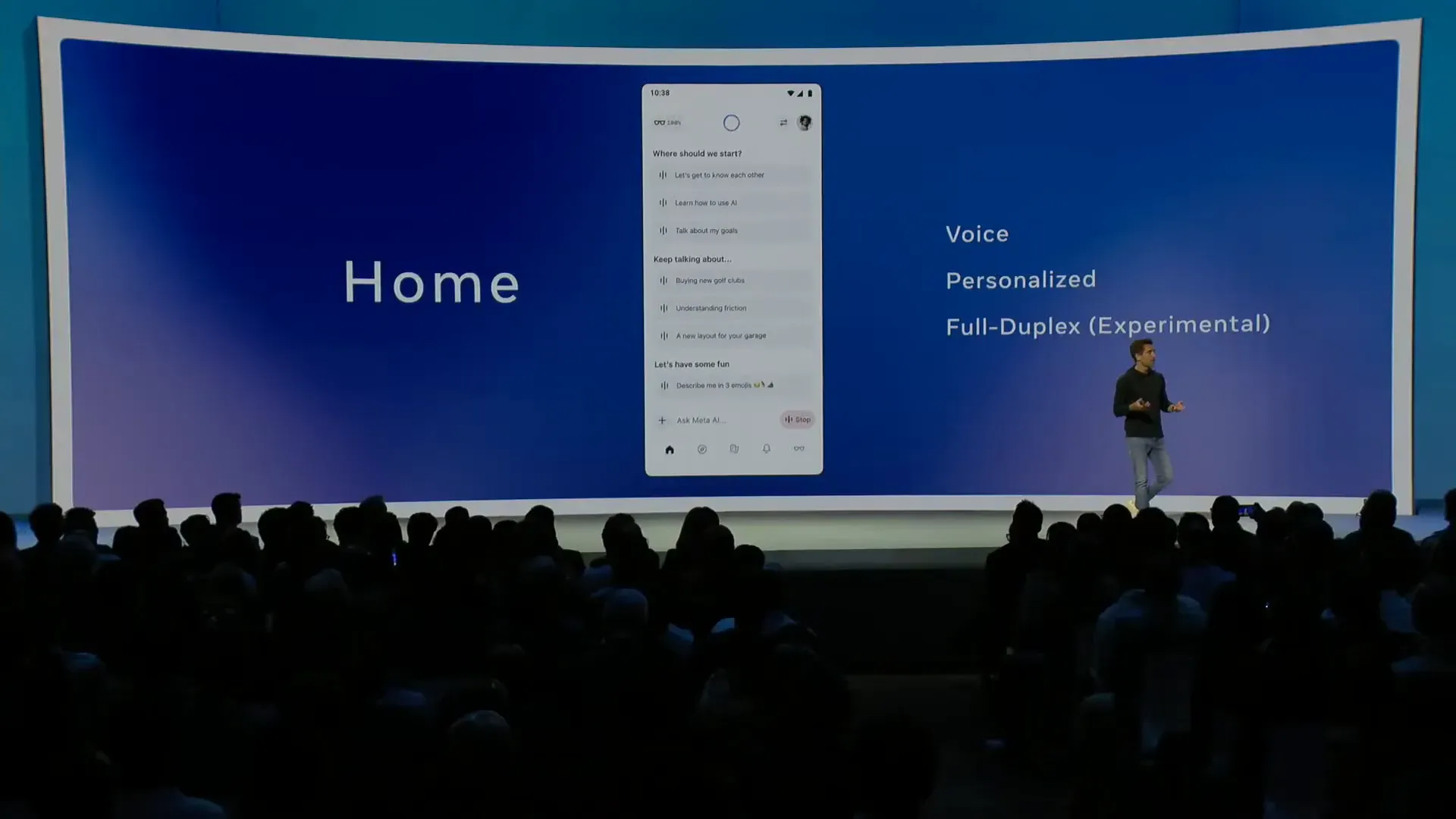

Meta AI App and a natural audio experience

Meta has launched a new AI app that focuses on a voice experience that communicates naturally and quickly. It can be connected to Facebook and Instagram accounts to tailor responses based on personal interests and memories, such as family members' names or birthdays.

A notable feature is full duplex voice, which provides a two-way conversation like a real phone call, including laughter and interruptions, and makes interacting with the AI feel more natural and enjoyable. Although this feature does not initially support tool use or web search, it shows the potential of the new technology.

The app also supports sharing prompts and creations such as artwork or program code, helping to build a creative and collaborative learning community.

Integration with Ray-Ban AI Glasses

Ray-Ban AI Glasses are a highly popular multimodal AI device. Users can quickly ask questions by voice about what they see through the lens. The Meta AI app is designed to be consistent with these devices and to work seamlessly with them.

Llama case studies and implementations

The use of Llama in real-world situations is varied and impressive, such as:

- Applications on the International Space Station: Booz Allen and the ISS use Llama 3 to help astronauts find information in a large volume of documents and manuals without relying on a connection to the outside world.

- Medical and Health Sector: Sofia in Latin America and Mayo Clinic use Llama to reduce paperwork time and help diagnose diseases, especially in radiology.

- Agriculture in East Africa: Farmer Chat provides farmers with information and guidance, with localization and local-language support.

- Used car market in Latin America and the Middle East: Kavak uses Llama to support its customers by customizing a model suited to each region.

- Large Business: AT&T uses Llama to analyze customer conversation logs to identify issues and prioritize bug fixes.

APIs and customization of Llama models for the job

Meta has launched the Llama API, which is quick and easy to use: a first request takes just one line of code. It supports Python and TypeScript and is compatible with the OpenAI SDK.

Key features of the API include:

- Supports multimodal input, such as images and text in the same request.

- JSON schema support for structured responses

- Support for tool calling, which lets the model automatically invoke the tools it is offered (in preview).

- Priority on security and privacy: data sent through the API is not used to train models.
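
The features listed above map onto the request shape used by OpenAI-compatible chat endpoints. The following is a minimal sketch that only builds a request body and does not send it. The model identifier, image URL, and schema fields are hypothetical placeholders, and the exact fields the Llama API accepts should be checked against its documentation.

```python
import json


def build_request(model: str, question: str, image_url: str) -> dict:
    """Build an OpenAI-style chat completion body that combines text and
    image input and asks for a response matching a JSON schema."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Text and image travel together in one message.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        # Structured output: the reply should conform to this schema.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "image_summary",
                "schema": {
                    "type": "object",
                    "properties": {
                        "caption": {"type": "string"},
                        "objects": {"type": "array", "items": {"type": "string"}},
                    },
                    "required": ["caption", "objects"],
                },
            },
        },
    }


body = build_request(
    model="example-llama-model",  # hypothetical model identifier
    question="What is in this picture?",
    image_url="https://example.com/photo.png",  # placeholder URL
)
print(json.dumps(body, indent=2))
```

Because the body follows the OpenAI chat format, the same dictionary could in principle be passed to any OpenAI-compatible client once a real endpoint and model name are filled in.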

Model customization (fine-tuning) is another strength of the Llama API, and it gives users full control over the resulting model. You can download the model you trained and run it anywhere, with no dependence on Meta's servers.

Model Customization Process

- Open the Fine tuning tab and start a new job.

- Upload data for training, or use data that has already been uploaded.

- Choose to set aside a portion of the dataset for evaluation.

- Name the job and set its training parameters.

- Track training progress through a real-time loss display.

- When training is finished, the model can be downloaded to run yourself or served via the Llama API.
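
The step of setting aside part of the dataset for evaluation can be sketched locally as follows. This is an illustration only, not the Llama API's own mechanism: the function name, the `prompt`/`completion` record layout, and the default fraction are assumptions.

```python
import random


def split_dataset(examples, eval_fraction=0.1, seed=0):
    """Shuffle a copy of the examples and hold out a fraction for evaluation.

    Returns (train_set, eval_set). A fixed seed keeps the split reproducible.
    """
    if not 0.0 < eval_fraction < 1.0:
        raise ValueError("eval_fraction must be between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    # Always keep at least one example for evaluation.
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]


# Hypothetical training records.
examples = [{"prompt": f"question {i}", "completion": f"answer {i}"} for i in range(20)]
train_set, eval_set = split_dataset(examples, eval_fraction=0.2)
print(len(train_set), len(eval_set))
```

Holding out examples the model never trains on is what makes the evaluation loss a fair signal of how the fine-tuned model will behave on new inputs.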

After training, models can be evaluated using a graders system that measures performance in areas of interest, such as factuality, and produces detailed reports.

Model Serving and Inference

One of the key challenges of deploying AI is serving models efficiently and at low cost. The MOE (Mixture of Experts) architecture activates only part of the parameters for each input, which reduces resource consumption and increases speed.
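
To make the idea of activating only part of the parameters concrete, here is a toy top-k routing sketch in plain Python. It is a generic illustration of Mixture of Experts routing and not Llama 4's actual implementation; the expert functions, router weights, and sizes are made up for the example.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route input x to the top_k highest-scoring experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's router vector).
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the top_k experts; the remaining experts are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate values are normalized over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](x)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


rng = random.Random(0)
dim, n_experts = 4, 8
router_weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n_experts)]
# Toy experts: each one scales the input by a different constant.
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(n_experts)]

y, used = moe_forward([1.0, 0.5, -0.5, 2.0], router_weights, experts, top_k=2)
print(f"experts evaluated: {used} ({len(used)} of {n_experts})")
```

Only two of the eight toy experts run for this input, which is the source of the compute savings: the total parameter count can grow while the work per input stays roughly constant.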

In addition, FP8 quantization is used, which reduces model size and makes it easier to run the model on a single machine, such as an H100 server.
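
The effect of lower precision on model size is simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The sketch below uses a 70-billion-parameter model as the example size and counts weights only; it ignores the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


n_params = 70e9  # example size: a 70-billion-parameter model
for name, bits in [("16-bit", 16), ("FP8", 8)]:
    print(f"{name}: {weight_memory_gb(n_params, bits):.0f} GB")
```

Halving the bits per parameter halves the weight footprint, which is why 8-bit weights can bring a model within reach of hardware that could not hold its 16-bit version.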

Llama's new inference stack also adds speculative decoding, which uses a small model to draft answers in advance and makes generation 1.5-2.5x faster, as well as a paged KV cache technique for handling very long inputs.
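
The drafting idea behind speculative decoding can be shown with toy models. This is a simplified greedy variant written for illustration, not the implementation in Llama's inference stack: `target_next` and `draft_next` are hypothetical stand-ins that map a token sequence to the next token, and a real system verifies all drafted positions in one batched forward pass of the large model.

```python
def greedy_decode(next_token, prompt, max_new_tokens):
    """Baseline: one call to the model per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(next_token(tokens))
    return tokens


def speculative_decode(target_next, draft_next, prompt, max_new_tokens, k=4):
    """Greedy speculative decoding; output matches decoding with target_next alone."""
    tokens = list(prompt)
    target_passes = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1. The small model drafts k tokens cheaply.
        draft = []
        for _ in range(k):
            draft.append(draft_next(tokens + draft))
        # 2. The large model checks the draft (counted as a single pass).
        target_passes += 1
        accepted = []
        for i in range(k):
            expected = target_next(tokens + draft[:i])
            accepted.append(expected)
            if expected != draft[i]:
                break  # first mismatch: keep the target's token, drop the rest
        tokens.extend(accepted)
    return tokens[: len(prompt) + max_new_tokens], target_passes


def target_next(seq):
    # Toy "large" model: counts upward modulo 10.
    return (seq[-1] + 1) % 10


def draft_next(seq):
    # Toy "small" model: usually agrees with the target, but guesses 0
    # whenever the sequence length is a multiple of five.
    return 0 if len(seq) % 5 == 0 else (seq[-1] + 1) % 10


reference = greedy_decode(target_next, [0], 12)
result, passes = speculative_decode(target_next, draft_next, [0], 12, k=4)
print(result == reference, passes)
```

The speedup comes from the large model confirming several drafted tokens per pass instead of producing one token per pass; how much is gained depends on how often the draft model agrees with the target.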

Recently, Meta announced a partnership with Cerebras and Groq to bring advanced hardware to the Llama API, speeding up serving and helping developers experiment before scaling up.

Tools and technologies from FAIR for AI visual perception

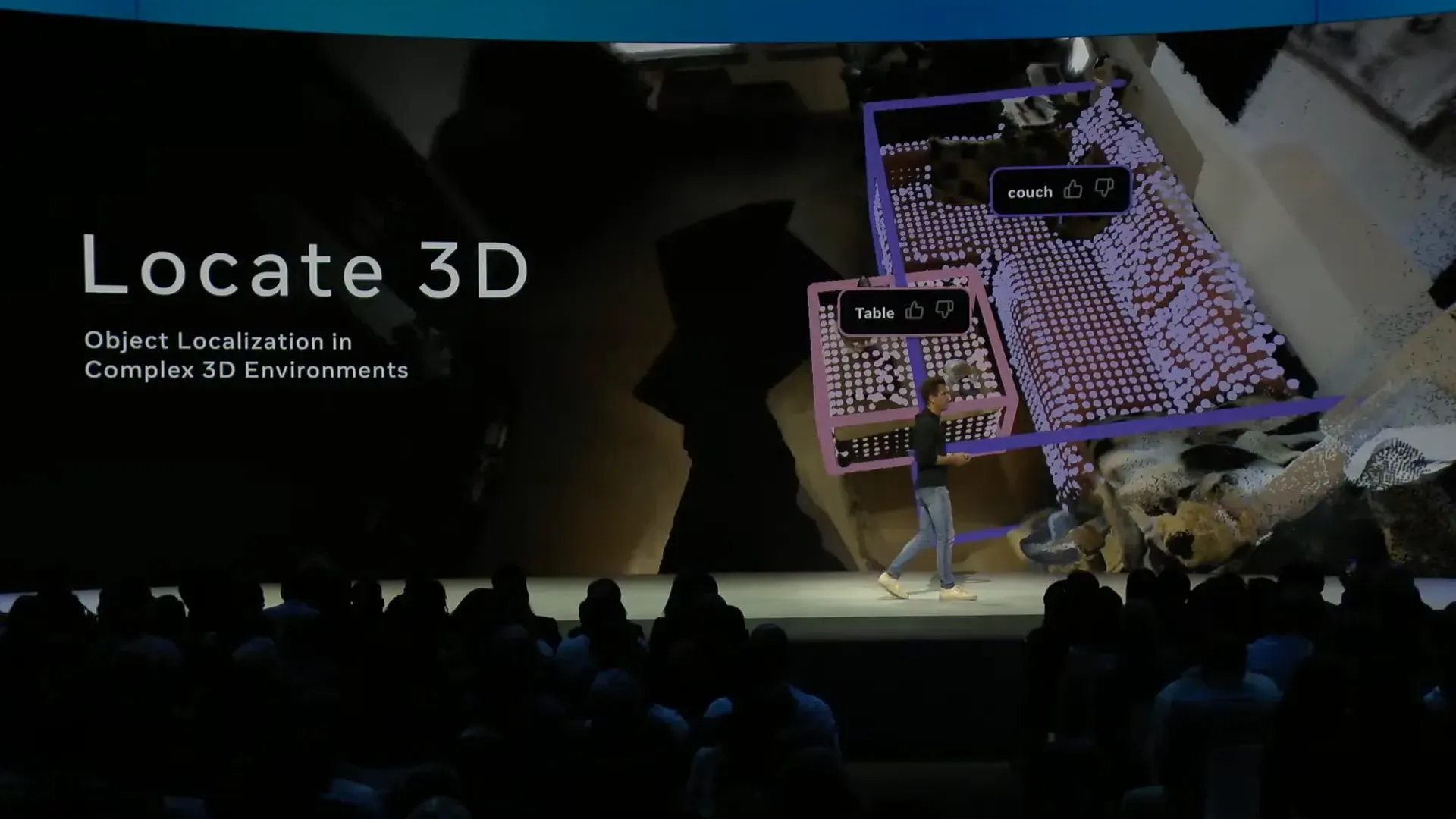

In addition to large language models, Meta's FAIR team has developed tools for image and video perception, such as Locate3D, which identifies objects and their boundaries in a three-dimensional environment from text commands and is well suited to creating datasets and developing applications in virtual worlds.

These tools build on the Perception Encoder, a flexible and portable visual encoder. Another example is SAM 2, presented as the world's best object segmentation system, which has been very popular in the scientific and creative communities and is used in products such as Instagram Edits, which has launched around the world.

The good news is that SAM 3, coming this summer, is expected to be a major advance.

Real-world experience and application of AI with families

Chris Cox described his 10-year-old son's trial of the Meta AI app. He started by asking it about general news, which did not interest his son. But when the conversation turned to Dungeons and Dragons (D&D), his son's favorite game, his interest picked up immediately, because the AI could answer specific questions about D&D in depth and keep the conversation going. The story reflects the potential of AI to adapt to each user's specific interests.

A Conversation Between Mark Zuckerberg and Ali Ghodsi: The Vision and Trends of Open Source AI

During the fireside chat between Mark Zuckerberg and Ali Ghodsi, co-founder and CEO of Databricks, Ali highlighted the rapid growth of high-performance small models, such as the 70-billion-parameter Llama 3.3 model, whose performance is equivalent to that of much larger models released within the past year.

Ali gave examples of customer successes, such as Crisis Text Line, which uses Llama to accurately detect the risk level of people who reach out in crisis and get them help, and applications in financial markets that let users ask financial questions in plain English instead of a complex query language.

Key innovations and techniques that help drive AI

- Mixture of Experts (MOE): It improves efficiency and reduces costs by activating only some of the parameters at a time.

- Increased Context Length: It allows the model to process large amounts of data in a single context.

- Reinforcement Learning on Customer Data: The technique that Databricks calls TAO allows the model to better understand and reason on customer-specific data.

- Distillation: Transferring knowledge from large models to small models suited to specialized applications.
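
To illustrate the distillation item above, one common formulation trains the small model to match the large model's softened output distribution. The sketch below computes that matching loss for a single example in plain Python. It is a generic textbook form, not the specific method used by Meta or Databricks, and the logits and temperature are made-up values.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]        # hypothetical large-model logits
close_student = [3.5, 1.2, 0.4]  # student that roughly agrees
far_student = [0.5, 1.0, 4.0]    # student that disagrees

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The loss is near zero when the student reproduces the teacher's preferences and grows as they diverge, so minimizing it pulls the small model toward the large model's behavior on the chosen task data.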

Ali emphasizes that small models that are properly distilled will become more popular than large models because of their speed and lower cost, making them suitable for low-latency tasks such as real-time coding assistance.

Mark Zuckerberg and Ali also talked about the importance of voice for interacting with AI in the future. They expect it to become a more mainstream interface, especially with wearable devices such as AI glasses that provide natural and continuous communication.

Open Source AI and the Future of the Industry

Both executives agreed that open source AI will be the heart of future innovation because it reduces costs. Ali said that many universities feel constrained by closed models, delaying research and development.

Open source AI helps build a strong community and stimulate knowledge exchange. This leads to the development of models with various capabilities and different applications.

Model Blending and Customization

In addition to using the main model, developers can also combine and distill models from different sources to create models suited to specific tasks, such as adding reasoning capabilities from one model to a Llama model.

Reinforcement learning and distillation techniques are becoming increasingly popular because they allow models to be more accurate and better suited to specific tasks at a lower cost.

Safety and reliability

Safety is a priority, especially when using models from external sources or models that have been customized.

Tools like LlamaGuard and Code Shield, developed by Meta, are examples of efforts to make models more secure and reliable.

The Diversity of AI Agents and Human Roles

In the future, there will be many AI agents, developed to meet the needs of specific businesses and users, such as small businesses that need AI to take care of customers through WhatsApp or other applications.

This diversity is necessary so that AI can meet the specific needs of each organization without relying on a single AI that may not be suitable for every situation.

However, humans still play an important role in regulating, supervising, and monitoring AI agents to ensure that their decisions are accurate and appropriate.

A Guide for Modern-Age AI Developers

Mark Zuckerberg and Ali Ghodsi both emphasized that AI is still in the early stages (Day Zero) of a new era full of undiscovered opportunities and innovations.

The most important thing is to have a data advantage. Because AI gets better when it has the right task-specific data, building a flywheel that collects data and continuously improves the model creates a competitive advantage.

In addition, creating test datasets (evals) and performance metrics that are suitable for production will help developers know how well their models are performing without having to manually check every answer.
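
A small evaluation harness makes this concrete. The sketch below is a minimal illustration under assumptions: `toy_model` is a stand-in for a real model call, the record layout with `prompt` and `expected` keys is hypothetical, and exact match is only one of many possible metrics.

```python
def exact_match(prediction: str, reference: str) -> bool:
    """Case-insensitive, whitespace-insensitive string comparison."""
    return prediction.strip().lower() == reference.strip().lower()


def run_eval(model, eval_set, metric=exact_match):
    """Return the fraction of examples the model gets right, plus the failures."""
    failures = []
    for example in eval_set:
        prediction = model(example["prompt"])
        if not metric(prediction, example["expected"]):
            failures.append(
                {"prompt": example["prompt"], "got": prediction, "expected": example["expected"]}
            )
    accuracy = 1 - len(failures) / len(eval_set)
    return accuracy, failures


def toy_model(prompt: str) -> str:
    # Stand-in for a real model call: a lookup table with one wrong answer.
    answers = {"2 + 2": "4", "capital of France": "paris", "opposite of hot": "warm"}
    return answers.get(prompt, "")


eval_set = [
    {"prompt": "2 + 2", "expected": "4"},
    {"prompt": "capital of France", "expected": "Paris"},
    {"prompt": "opposite of hot", "expected": "cold"},
]
accuracy, failures = run_eval(toy_model, eval_set)
print(f"accuracy: {accuracy:.2f}")
for failure in failures:
    print("failed:", failure)
```

Returning the failures alongside the score lets a developer review only the answers that went wrong, rather than reading every output by hand.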

Finally, have the courage to experiment and create new ideas, because the AI world is still open to innovations that have not yet happened and that no one has fully imagined.

Technical terms you should know

- MOE (Mixture of Experts): A sparse AI model architecture that activates only some of the experts in the model for each input, reducing resource consumption and increasing efficiency.

- Distillation: The process of transferring knowledge from a large model to a small model so that the small model has similar capabilities in a specific task.

- Fine tuning: Customizing an AI model with specific data to suit its intended application.

- Speculative decoding: A technique for accelerating generation in which a small model drafts the answer in advance for the main model to check.

- Full duplex voice: Voice communication in which both channels are open at the same time, making it possible to interact as in a real phone conversation.

Conclusion from Insiderly

- The launch of Llama 4 and the Llama API at LlamaCon 2025 is a major milestone confirming that open source AI is not just an option but is becoming the heart of future AI innovation.

- With an MOE architecture that improves efficiency and reduces costs, multimodal input support, and full model customization, Llama serves a wide range of uses, from mobile applications to large-scale enterprise applications.

- Collaboration with partners and the development of high-performance inference systems expand the ability to deliver fast and secure AI services. Meanwhile, voice support and wearables open up new ways to interact with AI.

- From the perspective of developers and organizations, having a data advantage and the ability to fine-tune models for specific tasks is key to pushing past the limits of traditional AI and creating real-world experiences in business and everyday life.

- Lastly, AI will not be a single assistant but a variety of AI agents that meet the unique needs of each business and user, which reflects a future in which AI becomes a part of life and work.

Now is therefore the best time for developers and organizations of all sizes to start innovating and experimenting with open source AI and to step into a new era of limitless innovation.