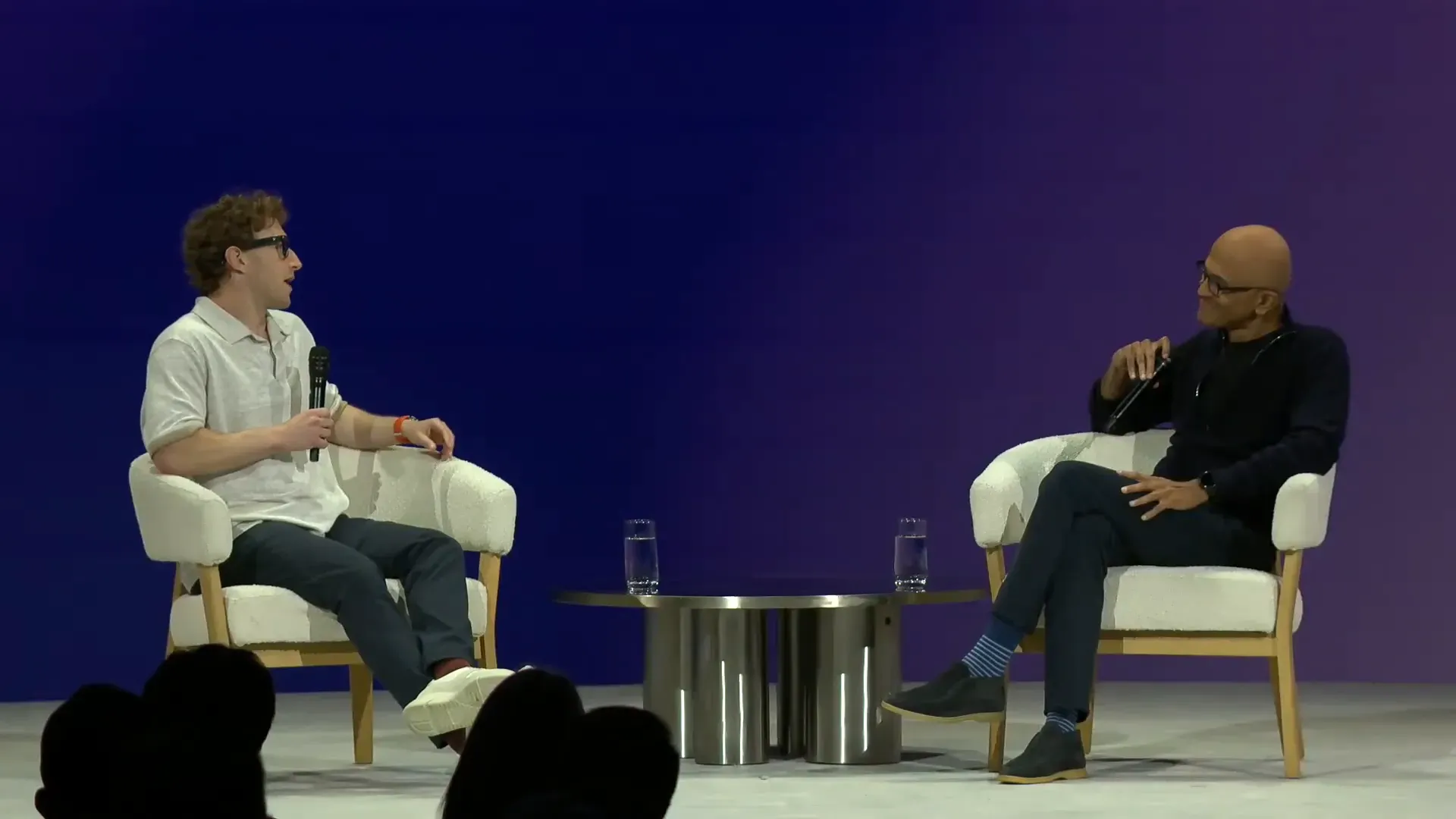

At the closing session of LlamaCon 2025, Meta founder and CEO Mark Zuckerberg and Microsoft Chairman and CEO Satya Nadella held a wide-ranging conversation. They shared their perspectives and experiences on the growth of AI infrastructure and on how AI is changing the way software is built and developed in the modern enterprise. This article walks through the key points the two technology leaders exchanged at this critical moment.

The Evolution of Technology and AI Through Past Platform Shifts

Satya Nadella began by reflecting on the history of computing, from the client-server era to the web, mobile, cloud, and now the era of AI. He sees each era as a major transition that requires building a new system from the ground up. Cloud infrastructure in particular has had to be re-architected for AI training, whose workload characteristics differ from those of traditional systems: data-parallel synchronous training, for example, behaves very differently from Hadoop-style batch processing.
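
To make the contrast with Hadoop concrete, here is a toy sketch of synchronous data-parallel training in plain Python. It rests on simplifying assumptions (a one-parameter linear model, simulated workers, and a hypothetical `all_reduce_mean` helper standing in for a real all-reduce collective) and is not how Azure or any training framework implements it. The key property is the barrier: every worker must finish its gradient before any worker can update, whereas Hadoop MapReduce tasks run independently and a slow or failed task can be retried on its own.

```python
# Toy sketch of synchronous data-parallel SGD (illustrative assumptions only).

def local_gradient(w, shard):
    # Mean-squared-error gradient for the 1-D model y = w * x on one shard.
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    # Hypothetical stand-in for a collective all-reduce:
    # every worker ends up holding the mean of all workers' values.
    avg = sum(values) / len(values)
    return [avg] * len(values)


def train_sync_data_parallel(shards, lr=0.05, steps=200):
    # One model replica per worker, all starting from the same weight.
    weights = [0.0] * len(shards)
    for _ in range(steps):
        # Each worker computes a gradient on its own shard.
        grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
        # Barrier: the step cannot proceed until every gradient is in.
        synced = all_reduce_mean(grads)
        # Every replica applies the identical averaged update.
        weights = [w - lr * g for w, g in zip(weights, synced)]
    return weights


if __name__ == "__main__":
    # Data drawn from y = 3x, split evenly across three workers.
    data = [(x, 3.0 * x) for x in [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]]
    shards = [data[0:2], data[2:4], data[4:6]]
    print(train_sync_data_parallel(shards))
```

Because all replicas apply the same averaged gradient, they stay identical, and a single slow worker stalls the whole step. That lockstep behavior is why this kind of workload rewards tightly coupled, high-bandwidth clusters.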

Mark Zuckerberg added that each generation of AI delivers rapid efficiency gains, which in turn drive huge increases in usage. He pointed to Moore's Law as an example: progress that seemed to have stalled has been re-energized by improvements at many levels at once, including hardware, software, and new model designs.
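
The point about gains at several levels is essentially multiplicative arithmetic. The sketch below uses made-up per-layer factors purely to show how independent improvements compound; the numbers are hypothetical and were not cited in the conversation, and real gains are rarely perfectly independent.

```python
from math import prod


def combined_speedup(gains):
    # Assumes the per-layer improvements are independent, so they multiply.
    return prod(gains.values())


# Hypothetical factors chosen only to illustrate the arithmetic.
gains = {"hardware": 2.0, "software": 1.5, "model_design": 2.0}
print(combined_speedup(gains))  # 6.0
```

Three modest gains compound into a 6x overall improvement, which is why progress can feel like it is accelerating even when no single layer improves dramatically.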

The Role of Open Source and the AI Ecosystem in the New Age

One point Satya emphasized was the importance of open source and open collaboration. He believes the world needs both closed and open models to serve customers comprehensively, just as Azure offers both SQL Server and Postgres, and both Windows and Linux.

Satya pointed out that open source models have a major advantage: they let customers train and customize models that become their own intellectual property, which matters in sectors that demand strong privacy and control. Supporting open source also helps create a more diverse and resilient ecosystem.

Infrastructure and Tools for Today's AI Developers

Microsoft has invested heavily in AI infrastructure across compute, storage, networking, and AI accelerators, aiming to give developers a one-stop service. On top of that, it has built Foundry, an app server that manages services such as search, security, and memory, making these capabilities easier for developers to use.

For GitHub Copilot, Microsoft emphasizes combining code completion, chat, and agent functionality (agentic workflows) to make coding as fluid and efficient as possible. Satya pointed out that integrating AI into developers' existing workflows is key to increasing productivity.

AI in Software Development and Changes in the Way It Works

Satya described how AI in software development has evolved: it started with code completion, expanded to chat, and most recently moved to agents to which developers can delegate entire tasks. He said that roughly 20-30% of the code within Microsoft is now written by AI and that the share is growing, with AI playing an increasing role in code review in particular.
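
The difference between completion, chat, and agents is largely about who drives the loop. A completion or chat reply is one model call per request; an agent repeatedly observes the result of its last action and decides what to do next until the task is done. The sketch below illustrates that loop with a hard-coded `policy` function standing in for a model and two hypothetical tools (`run_tests`, `apply_fix`). It is an assumption-laden illustration, not GitHub Copilot's implementation.

```python
from typing import Callable


# Hypothetical tools the agent is allowed to call; each mutates shared state.
def run_tests(state: dict) -> str:
    state["tests_passed"] = state["bug_fixed"]
    return "tests passed" if state["tests_passed"] else "tests failed"


def apply_fix(state: dict) -> str:
    state["bug_fixed"] = True
    return "patch applied"


TOOLS: dict[str, Callable[[dict], str]] = {"run_tests": run_tests, "apply_fix": apply_fix}


def policy(history: list) -> str:
    # Stand-in for a model: picks the next action from past observations.
    if not history:
        return "run_tests"
    last_action, observation = history[-1]
    if observation == "tests failed":
        return "apply_fix"
    if last_action == "apply_fix":
        return "run_tests"
    return "done"


def run_agent(max_steps: int = 10):
    state = {"bug_fixed": False, "tests_passed": False}
    history = []
    for _ in range(max_steps):  # step cap guards against endless loops
        action = policy(history)
        if action == "done":
            break
        observation = TOOLS[action](state)
        history.append((action, observation))
    return history, state


if __name__ == "__main__":
    print(run_agent())
```

The agent runs the tests, sees a failure, applies a fix, and re-runs the tests before stopping; the developer delegated the goal rather than each step.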

Mark Zuckerberg noted that at Meta such statistics are still driven mainly by code autocompletion, but Meta's teams are experimenting with AI on specialized tasks such as ad ranking and are building AI engineers to help accelerate development of the Llama models.

The "Distillation Factory" Concept and the Integration of Multiple AI Models

One topic the two discussed in depth was the "Distillation Factory": distilling large models into smaller, capable models that are easier to deploy and use fewer resources.
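
A common way to implement distillation is the soft-target loss, where the student is trained to match the teacher's temperature-softened output distribution. The sketch below computes that loss for a single example in plain Python. It assumes the classic KL-divergence formulation with a T² scaling factor and is not necessarily the recipe Meta or Microsoft use; the logits are made-up values.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the large model
    q = softmax(student_logits, temperature)  # predictions of the small model
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    close_student = [3.8, 1.1, 0.4]
    far_student = [0.5, 1.0, 4.0]
    # A student that mimics the teacher gets a lower loss.
    print(distillation_loss(teacher, close_student) < distillation_loss(teacher, far_student))
```

In practice this term is usually combined with an ordinary loss on ground-truth labels and minimized by gradient descent over many examples; the temperature softens the teacher's distribution so the student also learns how the teacher ranks the wrong answers.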

Satya explained that building infrastructure that lets users distill models and create task-specific models on their own will be an important step, allowing each organization to tailor AI to its needs quickly and efficiently.

Mark Zuckerberg cited the Maverick model, a multimodal model (supporting both text and images) that delivers high performance at a smaller size than comparable models. He said that very large models such as Behemoth are well suited to being distilled into small models that are practical for business use.

The Future of AI: Hybrid Models and Multi-Model Interoperability

Both agreed that the future of AI will combine a variety of models and architectures, such as Mixture of Experts (MoE) models, which route each input to a small set of specialized expert sub-networks to achieve both speed and high accuracy.
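
The routing idea behind MoE can be sketched in a few lines. Below, a hypothetical `moe_forward` picks the top-k experts by router score, renormalizes their scores with a softmax, and returns the weighted sum of only those experts' outputs. Real MoE layers learn the router and the experts jointly and operate on tensors; the toy experts and fixed scores here are assumptions for illustration.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_forward(x, experts, router_scores, k=2):
    """Route x to the top-k experts and mix their outputs.

    router_scores: one gating logit per expert. A real model computes these
    with a learned router; here they are passed in directly (an assumption).
    """
    ranked = sorted(range(len(experts)), key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_scores[i] for i in top])  # renormalize over chosen experts
    # Only the selected experts run, which is where the compute savings come from.
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


if __name__ == "__main__":
    # Toy "experts": simple functions standing in for expert sub-networks.
    experts = [lambda x: 2 * x, lambda x: x + 10, lambda x: -x, lambda x: x * x]
    out, chosen = moe_forward(3.0, experts, router_scores=[0.1, 2.0, -1.0, 1.0], k=2)
    print(chosen, out)
```

With k=2 out of four experts, only half of the expert computation runs for this input, while the weighted mix still draws on more than one specialist.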

Applications that use multiple models together will be an important step toward AI that is more flexible and better suited to complex tasks, especially with orchestration that lets each model communicate and coordinate effectively.
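
Orchestration can take many forms; one simple pattern is a coordinator that sends each step of a plan to whichever model is registered for that skill and passes the output forward. The sketch below assumes hypothetical `ModelEndpoint` records whose `run` functions are stand-ins for real model calls. It shows the control flow only, not any particular product's orchestration API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelEndpoint:
    name: str
    skills: set[str]
    run: Callable[[str], str]  # stand-in for a real model call


def orchestrate(plan, endpoints):
    """Run each (skill, prompt) step on the first endpoint with that skill,
    feeding the previous step's output into the next prompt."""
    context = ""
    trace = []
    for skill, prompt in plan:
        endpoint = next((e for e in endpoints if skill in e.skills), None)
        if endpoint is None:
            raise ValueError(f"no model registered for skill {skill!r}")
        context = endpoint.run(f"{prompt} {context}".strip())
        trace.append((skill, endpoint.name))
    return context, trace


if __name__ == "__main__":
    endpoints = [
        ModelEndpoint("small-text", {"summarize"}, lambda p: f"summary({p})"),
        ModelEndpoint("multimodal", {"describe_image"}, lambda p: f"caption({p})"),
    ]
    plan = [("describe_image", "chart.png"), ("summarize", "Summarize:")]
    print(orchestrate(plan, endpoints))
```

Here a multimodal model handles the image step and a smaller text model handles the summary, so each step goes to the model best suited (and cheapest) for it.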

A Perspective on the Future of AI and the Challenges of Change

Satya Nadella emphasized that AI is a new factor of production the world needs today to increase productivity in every sector, from healthcare and retail to general knowledge work. However, this change will take time and require deep changes to existing systems.

He pointed to history: it took decades for electricity to truly transform manufacturing. The same holds for AI, where the shift is not only about the technology itself but also about changes in software and in how organizations are managed.

Satya also encouraged developers and other stakeholders to embrace AI and build new solutions to the world's unsolved problems.

Conclusion from Insiderly

The conversation between Mark Zuckerberg and Satya Nadella at LlamaCon 2025 reflects a major shift in AI that goes beyond models or hardware: it is transforming the entire ecosystem, from software to the way people work.

Combining open and closed models, developing flexible infrastructure, and building tools that help developers work efficiently are at the heart of driving AI toward widespread adoption.

The "Distillation Factory" concept lets large, highly complex models be distilled into small, easy-to-use, resource-efficient ones, giving many organizations the opportunity to quickly build AI tailored to their business needs.

Finally, the real challenge is changing how organizations work and are managed so they can adapt to new technologies. Treating AI as a new factor of production that must be integrated into systems and corporate culture will be the key to unlocking its true potential.

For developers and enterprise leaders, this is the time to be creative and adapt, moving toward a future where AI is a powerful tool for solving problems and increasing efficiency in every aspect of life and business.

Technical terms to know

- AI Accelerator: Specialized hardware, such as GPUs or TPUs, that speeds up AI workloads.

- Distillation: The process of transferring the knowledge of a large AI model into a smaller model that retains much of its performance.

- MoE (Mixture of Experts): A model architecture made up of multiple expert sub-networks, with a router that activates only a few experts for each input.

- Agentic Workflow: A mode of operation in which an AI system can plan and carry out tasks independently on behalf of humans.

- Orchestration: Managing and coordinating multiple systems or models so they work together effectively.